What is a Generative Pre-Trained Transformer?

A Generative Pre-Trained Transformer, commonly known as GPT, is a type of large language model built on the transformer neural network architecture and used for natural language processing. A GPT model generates human-like text by repeatedly predicting the next token given the text so far, which makes it useful in applications such as chatbots and content creation.

How Generative Pre-Trained Transformers Work

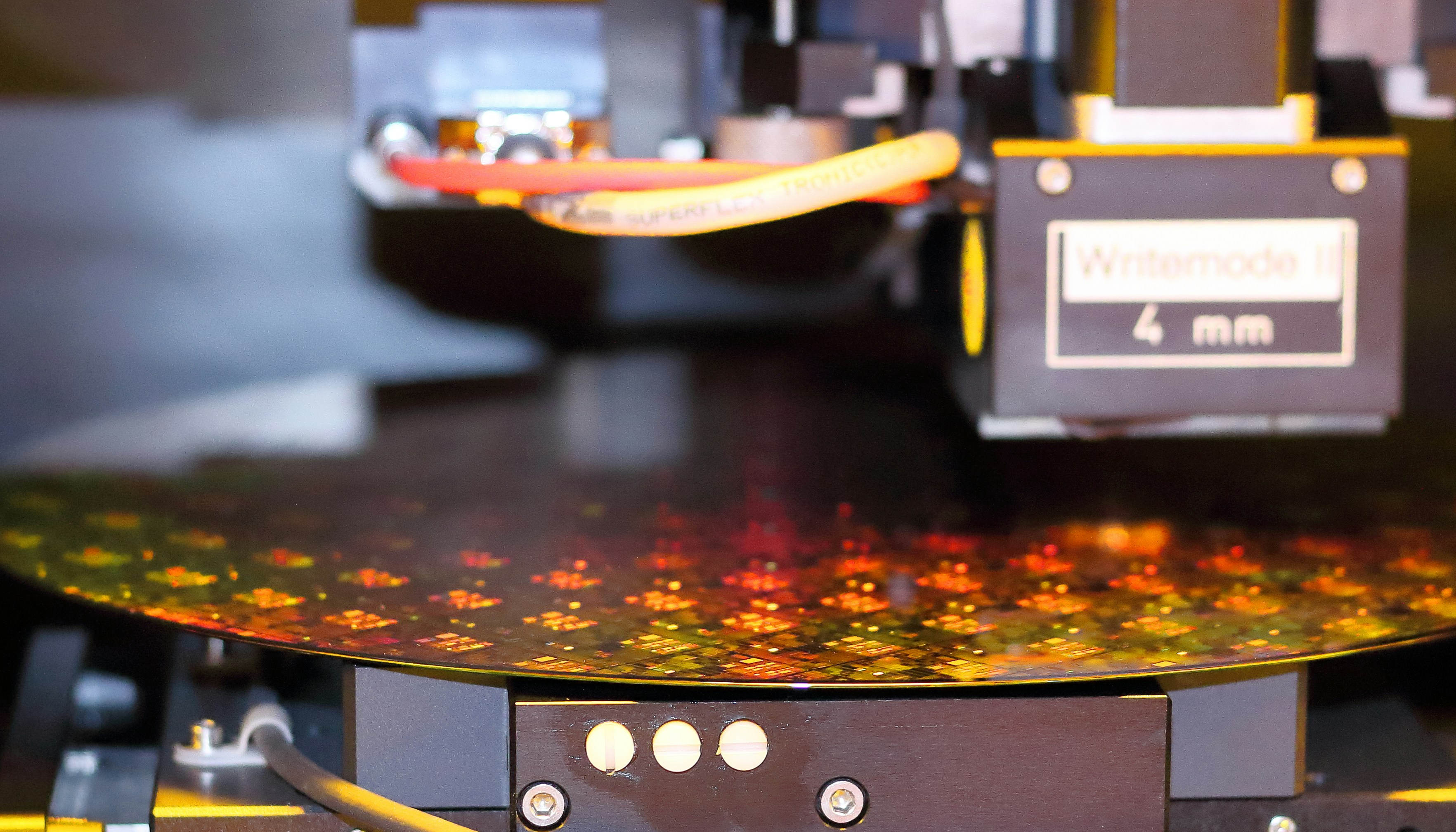

How generative pre-trained transformers work follows from how they are trained. First, the model is pre-trained on vast text datasets without human-written labels (often described as unsupervised or self-supervised learning): it learns to predict the next token, and in doing so picks up language patterns and contextual information. This pre-training phase gives the model broad, general-purpose knowledge of language. The model can then be fine-tuned on a smaller dataset for a specific task, which adapts it to perform well in that context.
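The two training phases can be illustrated with a deliberately tiny sketch. It assumes a bigram word counter as a stand-in for a transformer network, so it shows only the workflow (pre-train on general text, fine-tune on task text, then generate), not how a real GPT is built. The names `BigramModel` and `next_token_pairs` and both corpora are hypothetical and invented for this illustration.

```python
from collections import Counter, defaultdict


def next_token_pairs(text):
    """Build (context, target) training pairs from raw text.

    No human labels are needed: each word's "label" is simply the next word.
    """
    tokens = text.lower().split()
    return list(zip(tokens, tokens[1:]))


class BigramModel:
    """Toy stand-in for a language model (NOT a transformer).

    It only counts which word follows which, and predicts the most frequent one.
    """

    def __init__(self):
        self.counts = defaultdict(Counter)

    def train(self, text):
        # The same update rule serves both pre-training and fine-tuning;
        # only the data differs.
        for context, target in next_token_pairs(text):
            self.counts[context][target] += 1

    def predict(self, context):
        followers = self.counts.get(context)
        if not followers:
            return None  # word never seen as a context
        return max(followers, key=followers.get)

    def generate(self, start, max_tokens=3):
        # Generate text one token at a time, feeding each prediction back in.
        out = [start]
        for _ in range(max_tokens):
            nxt = self.predict(out[-1])
            if nxt is None:
                break
            out.append(nxt)
        return " ".join(out)


# Made-up corpora for illustration only.
general_corpus = "the cat sat on the mat . the cat sat on the sofa . the cat sat quietly ."
support_corpus = "the order shipped . the order arrived . the order shipped . the order shipped ."

model = BigramModel()

# Phase 1: pre-training on general text.
model.train(general_corpus)
before = model.generate("the")
print(before)  # the cat sat on

# Phase 2: fine-tuning on task-specific text (here, customer support).
model.train(support_corpus)
after = model.generate("the")
print(after)  # the order shipped .
```

After fine-tuning, the toy model continues "the" with support-style text, yet `model.predict("sat")` still returns `"on"` from the general corpus. A real GPT replaces the count table with a transformer network trained by gradient descent, but the idea of reusing pre-trained knowledge and then adapting it with a smaller dataset is the same.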

Applications of Generative Pre-Trained Transformers

Generative pre-trained transformers have found a wide range of applications, from writing essays and articles to powering conversational agents. Organizations in content creation, customer support, and education use GPT technology to improve efficiency and user experience.